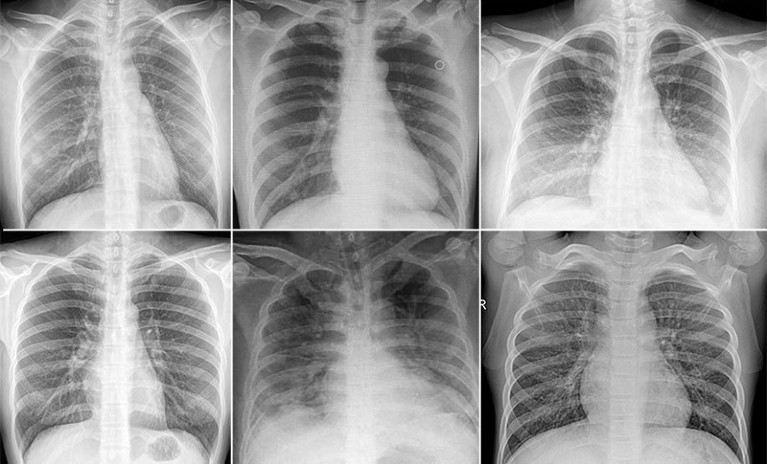

During the COVID-19 pandemic in late 2020, testing kits for the viral infection were scant in some countries. So the idea of diagnosing infection with a medical technique that was already widespread, chest X-rays, sounded appealing. Although the human eye can’t reliably discern differences between infected and non-infected individuals, a team in India reported that artificial intelligence (AI) could do it, using machine learning to analyse a set of X-ray images<sup>1</sup>.

The paper, one of dozens of studies on the idea, has been cited more than 900 times. But the following September, computer scientists Sanchari Dhar and Lior Shamir at Kansas State University in Manhattan took a closer look<sup>2</sup>. They trained a machine-learning algorithm on the same images, but used only blank background sections that showed no body parts at all. Yet their AI could still pick out COVID-19 cases at well above chance level.

The problem seemed to be that there were consistent differences in the backgrounds of the medical images in the data set. An AI system could pick up on those artefacts to succeed in the diagnostic task without learning any clinically relevant features, making it medically useless.
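The kind of background control described above can be sketched in a few lines. This is a toy illustration, not Dhar and Shamir's method: the synthetic images, the 10-unit brightness offset standing in for a scanner artefact, the 4×4 corner patch and the names `make_image`, `corner_mean` and `background_control_accuracy` are all assumptions made for the example.

```python
import random

def make_image(rng, label, size=16):
    """Synthetic 'scan' made of noise only; no anatomy is drawn.

    Assumption: class 1 images are uniformly brighter by 10 units,
    standing in for a scanner or site artefact.
    """
    base = 100.0 + (10.0 if label == 1 else 0.0)
    return [[rng.gauss(base, 5.0) for _ in range(size)] for _ in range(size)]

def corner_mean(image, patch=4):
    """Mean intensity of the top-left patch, assumed to hold background only."""
    values = [image[r][c] for r in range(patch) for c in range(patch)]
    return sum(values) / len(values)

def background_control_accuracy(n_per_class=100, seed=0):
    """Train and test a threshold classifier on background patches alone."""
    rng = random.Random(seed)
    data = [(make_image(rng, label), label)
            for label in (0, 1) for _ in range(n_per_class)]
    rng.shuffle(data)
    half = len(data) // 2
    train, test = data[:half], data[half:]

    # "Training": put a threshold midway between the two class means.
    class_means = {}
    for label in (0, 1):
        vals = [corner_mean(img) for img, y in train if y == label]
        class_means[label] = sum(vals) / len(vals)
    threshold = (class_means[0] + class_means[1]) / 2.0

    correct = sum((corner_mean(img) > threshold) == (y == 1) for img, y in test)
    return correct / len(test)

accuracy = background_control_accuracy()
print(f"accuracy from background patches only: {accuracy:.2f}")
```

If a classifier that sees only background scores well above chance, as it does here by construction, the data set carries a label-correlated artefact, and a high score on the full images says little about clinically relevant features.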

Shamir and Dhar found several other cases in which a reportedly successful image classification by AI, from cell types to face recognition, returned similar results from blank or meaningless parts of the images. The algorithms performed better than chance at recognizing faces without faces, and cells without cells. Some of these papers have been cited hundreds of times.

Chest X-ray images of healthy people (left); those with COVID-19 (centre); and those with pneumonia (right). Credit: Healthy and Pneumonia: D. Kermany et al./Cell (CC BY 4.0); COVID-19: E. M. Edrada et al./Trop. Med. Health (CC BY 4.0).

“These examples might be amusing”, Shamir says, but in biomedicine, misclassification could be a matter of life and death. “The problem is extremely common, a lot more common than most of my colleagues would want to believe.” A separate review in 2021 examined 62 studies using machine learning to diagnose COVID-19 from chest X-rays or computed tomography scans; it concluded that none of the AI models was clinically useful, because of methodological flaws or biases in image data sets<sup>3</sup>.

The errors that Shamir and Dhar found are just some of the ways in which machine learning can give rise to misleading claims in research. Computer scientists Sayash Kapoor and Arvind Narayanan at Princeton University in New Jersey reported earlier this year that the problem of data leakage (when there is insufficient separation between the data used to train an AI system and those used to test it) has caused reproducibility issues in 17 fields that they examined, affecting hundreds of papers<sup>4</sup>. They argue that naive use of AI is leading to a reproducibility crisis.

Machine learning (ML) and other types of AI are powerful statistical tools that have advanced almost every area of science by picking out patterns in data that are often invisible to human researchers. At the same time, some researchers worry that ill-informed use of AI software is driving a deluge of papers with claims that cannot be replicated, or that are wrong or useless in practical terms.

There has been no systematic estimate of the extent of the problem, but researchers say that, anecdotally, error-strewn AI papers are everywhere. “This is a widespread issue impacting many communities beginning to adopt machine-learning methods,” Kapoor says.

Aeronautical engineer Lorena Barba at George Washington University in Washington DC agrees that few, if any, fields are exempt from the issue. “I’m confident stating that scientific machine learning in the physical sciences is presenting widespread problems,” she says. “And this is not about lots of poor-quality or low-impact papers,” she adds. “I have read many articles in prestigious journals and conferences that compare with weak baselines, exaggerate claims, fail to report full computational costs, completely ignore limitations of the work, or otherwise fail to provide sufficient information, data or code to reproduce the results.”

“There is a proper way to apply ML to test a scientific hypothesis, and many scientists were never really trained properly to do that because the field is still relatively new,” says Casey Bennett at DePaul University in Chicago, Illinois, a specialist in the use of computer methods in health. “I see a lot of common mistakes repeated over and over,” he says. For ML tools used in health research, he adds, “it’s like the Wild West right now.”

How AI goes astray

As with any powerful new statistical technique, AI systems can make it easy for researchers looking for a particular result to fool themselves. “AI provides a tool that allows researchers to ‘play’ with the data and parameters until the results are aligned with the expectations,” says Shamir.

“The incredible flexibility and tunability of AI, and the lack of rigour in developing these models, provide way too much latitude,” says computer scientist Benjamin Haibe-Kains at the University of Toronto, Canada, whose lab applies computational methods to cancer research.


Data leakage seems to be particularly common, according to Kapoor and Narayanan, who have laid out a taxonomy of such problems<sup>4</sup>. ML algorithms are trained on data until they can reliably produce the right outputs for each input (to correctly classify an image, say). Their performance is then evaluated on an unseen (test) data set. As ML experts know, it is essential to keep the training set separate from the test set. But some researchers apparently don’t know how to ensure this.

The issue can be subtle: if a random subset of test data is taken from the same pool as the training data, that could lead to leakage. And if medical data from the same individual (or the same scientific instrument) are split between training and test sets, the AI might learn to identify features associated with that individual or that instrument, rather than a specific medical condition (a problem identified, for example, in one use of AI to analyse histopathology images<sup>5</sup>). That’s why it is essential to run ‘control’ trials on blank backgrounds of images, Shamir says, to see if what the algorithm is generating makes logical sense.
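One common safeguard against the individual-level leakage described here is to split by group rather than by record, so that every scan from a given patient (or instrument) lands on one side of the split only. The following is a minimal sketch under assumed names: `group_train_test_split`, the `patient_id` field and the toy records are hypothetical, and the article does not say which procedure any particular study used.

```python
import random

def group_train_test_split(records, group_key, test_fraction=0.3, seed=0):
    """Split records so that no group appears in both train and test.

    Whole groups (for example, patients) are assigned to the test set,
    never individual records.
    """
    groups = sorted({r[group_key] for r in records})
    rng = random.Random(seed)
    rng.shuffle(groups)
    n_test = max(1, round(len(groups) * test_fraction))
    test_groups = set(groups[:n_test])
    train = [r for r in records if r[group_key] not in test_groups]
    test = [r for r in records if r[group_key] in test_groups]
    return train, test

# Toy data: 10 hypothetical patients with 3 scans each.
records = [{"patient_id": f"P{i // 3}", "scan": i} for i in range(30)]
train, test = group_train_test_split(records, "patient_id")

train_ids = {r["patient_id"] for r in train}
test_ids = {r["patient_id"] for r in test}
print(len(train), len(test), train_ids & test_ids)
```

The same idea applies to any grouping variable that could carry a spurious signal, such as the scanner, the hospital or the acquisition batch.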

Kapoor and Narayanan also raise the problem of when the test set doesn’t reflect real-world data. In this case, a method might give reliable and valid results on its test data, but that can’t be reproduced in the real world.

“There is much more variation in the real world than in the lab, and the AI models are often not tested for it until we deploy them,” Haibe-Kains says.

In one example, an AI developed by researchers at Google Health in Palo Alto, California, was used to analyse retinal images for signs of diabetic retinopathy, which can cause blindness. When others in the Google Health team trialled it in clinics in Thailand, it rejected many images taken under suboptimal conditions, because the system had been trained on high-quality scans. The high rejection rate created a need for more follow-up appointments with patients: an unnecessary workload<sup>6</sup>.


Efforts to correct training or test data sets can lead to their own problems. If the data are imbalanced (that is, they don’t sample the real-world distribution evenly), researchers might apply rebalancing algorithms, such as the Synthetic Minority Oversampling Technique (SMOTE)<sup>7</sup>, which generates synthetic data for under-sampled regions.

However, Bennett says, “in situations when the data is heavily imbalanced, SMOTE will lead to overly optimistic estimates of performance, because you are essentially creating lots of ‘fake data’ based on an untestable assumption about the underlying data distribution”. In other words, SMOTE ends up not so much balancing as manufacturing the data set, which is then pervaded with the same biases that are inherent in the original data.
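One way to limit how far synthetic records distort a performance estimate is to split first and oversample only the training portion, so that the model is scored on real records alone. The sketch below assumes a simplified interpolation (between random pairs of minority points) rather than the published SMOTE algorithm, which interpolates towards nearest neighbours; the function names and the toy data are hypothetical. It does not remove Bennett's underlying concern about the untestable distributional assumption; it only keeps the manufactured points out of the evaluation set.

```python
import random

def interpolate_minority(minority, n_new, rng):
    """Create n_new synthetic points on segments between random minority pairs.

    Simplification: real SMOTE interpolates towards one of the k nearest
    neighbours of each point, not towards an arbitrary partner.
    """
    synthetic = []
    for _ in range(n_new):
        a, b = rng.sample(minority, 2)
        t = rng.random()
        synthetic.append([x + t * (y - x) for x, y in zip(a, b)])
    return synthetic

def split_then_oversample(majority, minority, test_percent=30, seed=0):
    """Hold out real test data first, then balance the training set only."""
    rng = random.Random(seed)

    def split(points):
        pts = points[:]
        rng.shuffle(pts)
        n_test = max(1, len(pts) * test_percent // 100)
        return pts[n_test:], pts[:n_test]

    maj_train, maj_test = split(majority)
    min_train, min_test = split(minority)

    # Synthetic points are built from training minority records only.
    n_new = max(0, len(maj_train) - len(min_train))
    synthetic = interpolate_minority(min_train, n_new, rng)

    train = [(p, 0) for p in maj_train] + [(p, 1) for p in min_train + synthetic]
    test = [(p, 0) for p in maj_test] + [(p, 1) for p in min_test]
    return train, test, synthetic

# Toy imbalanced data: 40 majority points and 10 minority points in 2D.
data_rng = random.Random(1)
majority = [[data_rng.gauss(0, 1), data_rng.gauss(0, 1)] for _ in range(40)]
minority = [[data_rng.gauss(2, 1), data_rng.gauss(2, 1)] for _ in range(10)]
train, test, synthetic = split_then_oversample(majority, minority)
print(len(train), len(test), len(synthetic))
```

Oversampling before the split would let interpolated copies of test records influence training, which is one route to the overly optimistic estimates described above.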

Even experts can find it hard to escape these problems. In 2022, for instance, data scientist Gaël Varoquaux at the French National Institute for Research in Digital Science and Technology (INRIA) in Paris and his colleagues ran an international challenge for teams to develop algorithms that could make accurate diagnoses of autism spectrum disorder from brain-structure data obtained by magnetic resonance imaging (MRI)<sup>8</sup>.

The challenge garnered 589 submissions from 61 teams, and the 10 best algorithms (mostly using ML) seemed to perform better using MRI data compared with the existing method of diagnosis, which uses genotypes. But those algorithms did not generalize well to another data set that had been kept private from the public data given to teams to train and test their models. “The best predictions on the public dataset were too good to be true, and did not carry over to the unseen, private dataset,” the researchers wrote<sup>8</sup>. In essence, this is because developing and testing a method on a small data set, even when trying to avoid data leakage, will always end up overfitting to those data, Varoquaux says; that is, the method becomes so closely focused on aligning to the particular patterns in the data that it loses generality.
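One ingredient of this effect, selecting the best of many submissions on a small public test set, can be reproduced with models that have no skill at all. The simulation below is an illustration under stated assumptions, not a reconstruction of the challenge: the "models" guess labels at random, and the set sizes, the number of models and the function name `leaderboard_experiment` are invented for the example.

```python
import random

def accuracy(predictions, truth):
    """Fraction of predictions that match the true labels."""
    return sum(p == t for p, t in zip(predictions, truth)) / len(truth)

def leaderboard_experiment(n_models=500, n_public=40, n_private=2000, seed=0):
    """Pick the best of many random guessers on a small public test set,
    then score that same 'model' on a large private set."""
    rng = random.Random(seed)
    public_truth = [rng.randint(0, 1) for _ in range(n_public)]
    private_truth = [rng.randint(0, 1) for _ in range(n_private)]

    best_public, private_of_best = -1.0, None
    for _ in range(n_models):
        # Each "model" is pure noise: its predictions ignore the inputs.
        public_pred = [rng.randint(0, 1) for _ in range(n_public)]
        private_pred = [rng.randint(0, 1) for _ in range(n_private)]
        public_acc = accuracy(public_pred, public_truth)
        if public_acc > best_public:
            best_public = public_acc
            private_of_best = accuracy(private_pred, private_truth)
    return best_public, private_of_best

best_public, private_of_best = leaderboard_experiment()
print(f"best public accuracy: {best_public:.2f}")
print(f"same model on private data: {private_of_best:.2f}")
```

The winning public score sits well above 50% purely through selection, whereas the private score stays near chance. Real submissions have genuine skill as well, but the same selection pressure inflates their public scores.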

Overcoming the problem

This August, Kapoor, Narayanan and their co-workers proposed a way to tackle the issue with a checklist of standards for reporting AI-based science<sup>9</sup>, which runs to 32 questions on factors such as data quality, details of modelling and risks of data leakage. They say their list “provides a cross-disciplinary bar for reporting standards in ML-based science”. Other checklists have been created for specific fields, such as for the life sciences<sup>10</sup> and chemistry<sup>11</sup>.

Many argue that research papers using AI should make their methods and data fully open. A 2019 study by data scientist Edward Raff at the Virginia-based analytics firm Booz Allen Hamilton found that only 63.5% of 255 papers using AI methods could be reproduced as reported<sup>12</sup>, but computer scientist Joelle Pineau at McGill University in Montreal, Canada (who is also vice-president of AI research at Meta) and others later stated that reproducibility rises to 85% if the original authors help with those efforts by actively supplying data and code<sup>13</sup>. With that in mind, Pineau and her colleagues proposed a protocol for papers that use AI methods, which specifies that the source code be included with the submission and that, as with Kapoor and Narayanan’s recommendations, it be assessed against a standardized ML reproducibility checklist<sup>13</sup>.

But researchers note that providing enough details for full reproducibility is hard in any computational science, let alone in AI.


And checklists can only achieve so much. Reproducibility doesn’t guarantee that the model is giving correct results, but only self-consistent ones, warns computer scientist Joaquin Vanschoren at the Eindhoven University of Technology in the Netherlands. He also points out that “a lot of the really high-impact AI models are created by big companies, who seldom make their codes available, at least immediately.” And, he says, sometimes people are reluctant to release their own code because they don’t think it is ready for public scrutiny.

Although some computer-science conferences require that code be made available to have a peer-reviewed proceedings paper published, this is not yet universal. “The most important conferences are more serious about it, but it’s a mixed bag,” says Vanschoren.

Part of the problem could be that there simply are not enough data available to properly test the models. “If there aren’t enough public data sets, then researchers can’t evaluate their models correctly and end up publishing low-quality results that show great performance,” says Joseph Cohen, a scientist at Amazon AWS Health AI, who also directs the US-based non-profit Institute for Reproducible Research. “This issue is very bad in medical research.”

The pitfalls might be all the more hazardous for generative AI systems such as large language models (LLMs), which can create new data, including text and images, using models derived from their training data. Researchers can use such algorithms to enhance the resolution of images, for instance. But unless they take great care, they could end up introducing artefacts, says Viren Jain, a research scientist at Google in Mountain View, California, who works on developing AI for visualizing and manipulating large data sets.

“There has been a lot of interest in the microscopy world to improve the quality of images, like removing noise,” he says. “But I wouldn’t say these things are foolproof, and they could be introducing artefacts.” He has seen such dangers in his own work on images of brain tissue. “If we weren’t careful to take the proper steps to validate things, we could have easily done something that ended up inadvertently prompting an incorrect scientific conclusion.”

Jain is also concerned about the possibility of deliberate misuse of generative AI as an easy way to create genuine-seeming scientific images. “It’s hard to avoid the concern that we could see a greater amount of integrity issues in science,” he says.

Culture shift

Some researchers think that the problems will only be truly addressed by changing cultural norms about how data are presented and reported. Haibe-Kains is not very optimistic that such a change will be easy to engineer. In 2020, he and his colleagues criticized a prominent study on the potential of ML for detecting breast cancer in mammograms, authored by a team that included researchers at Google Health<sup>14</sup>. Haibe-Kains and his co-authors wrote that “the absence of sufficiently documented methods and computer code underlying the study effectively undermines its scientific value”<sup>15</sup>; in other words, the work could not be examined because there wasn’t enough information to reproduce it.


The authors of that study said in a published response, however, that they were not at liberty to share all the information, because some of it came from a US hospital that had privacy concerns with making it accessible. They added that they “strove to document all relevant machine learning methods while keeping the paper accessible to a clinical and general scientific audience”<sup>16</sup>.

More widely, Varoquaux and computer scientist Veronika Cheplygina at the IT University of Copenhagen have argued that current publishing incentives, especially the pressure to generate attention-grabbing headlines, act against the reliability of AI-based findings<sup>17</sup>. Haibe-Kains adds that authors do not always “play the game in good faith” by complying with data-transparency guidelines, and that journal editors often don’t push back enough against this.

The problem is not so much that editors waive rules about transparency, Haibe-Kains argues, but that editors and reviewers might be “poorly educated on the real versus fictitious obstacles for sharing data, code and so on, so they tend to be content with very shallow, unreasonable justifications [for not sharing such information]”. Indeed, authors might simply not understand what is required of them to ensure the reliability and reproducibility of their work. “It’s hard to be completely transparent if you don’t fully understand what you are doing,” says Bennett.

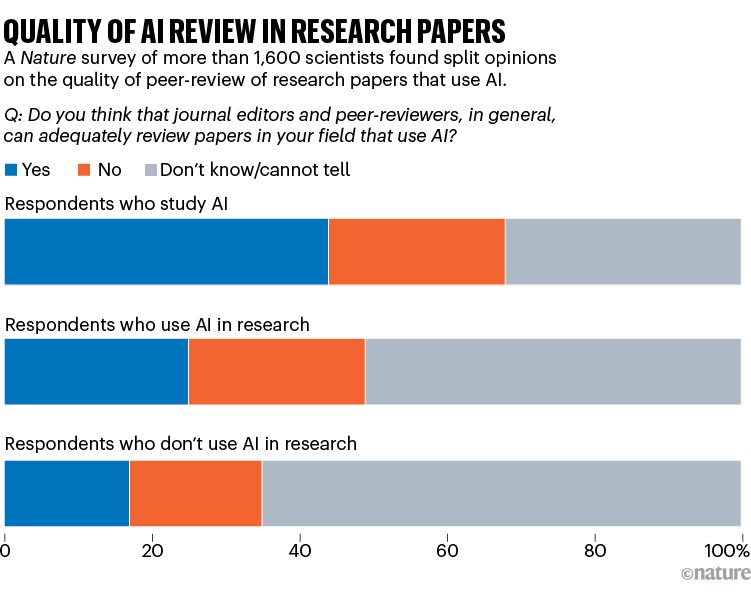

In a Nature survey this year that asked more than 1,600 researchers about AI, views on the adequacy of peer review for AI-related journal articles were split. Among the scientists who used AI for their work, one-quarter thought reviews were adequate, one-quarter felt they were not and around half said they didn’t know (see ‘Quality of AI review in research papers’ and Nature 621, 672–675; 2023).

Source: Nature 621, 672–675 (2023).

Although plenty of potential issues have been raised about individual papers, they rarely seem to get resolved. Individual cases tend to get bogged down in counterclaims and disputes about fine details. For example, in some of the case studies investigated by Kapoor and Narayanan, involving uses of ML to predict outbreaks of civil war, some of their claims that the results were distorted by data leakage were met with public rebuttals by the authors (see Nature 608, 250–251; 2022). And the authors of the study on COVID-19 identification from chest X-rays<sup>1</sup> critiqued by Dhar and Shamir told Nature that they do not accept the criticisms.

Learning to fly

Not everyone thinks there is an AI crisis looming. “In my experience, I have not seen the application of AI resulting in an increase in irreproducible results,” says neuroscientist Lucas Stetzik at Aiforia Technologies, a Helsinki-based consultancy for AI-based medical imaging. Indeed, he thinks that, carefully applied, AI techniques can help to eliminate the cognitive biases that often leak into researchers’ work. “I was drawn to AI specifically because I was frustrated by the irreproducibility of many methods and the ease with which some irresponsible researchers can bias or cherry-pick results.”

Although concerns about the validity or reliability of many published findings on the uses of AI are widespread, it is not clear that faulty or unreliable findings based on AI in the scientific literature are yet creating real dangers of, say, misdiagnosis in clinical practice. “I think that has the potential to happen, and I would not be surprised to find out it is already happening, but I haven’t seen any such reports yet,” says Bennett.

Cohen also feels that the issues might resolve themselves, just as teething problems with other new scientific methods have. “I think that things will just naturally work out in the end,” he says. “Authors who publish poor-quality papers will be regarded poorly by the research community and not get future jobs. Journals that publish these papers will be regarded as untrustworthy and good authors won’t want to publish in them.”

Bioengineer Alex Trevino at the bioinformatics company Enable Medicine in Menlo Park, California, says that one key aspect of making AI-based research more reliable is to ensure that it is done in interdisciplinary teams. For example, computer scientists who understand how to curate and handle data sets should work with biologists who understand the experimental complexities of how the data were obtained.

Bennett thinks that, in a decade or two, researchers will have a more sophisticated understanding of what AI can offer and how to use it, much as it took biologists that long to better understand how to relate genetic analyses to complex diseases. And Jain says that, at least for generative AI, reproducibility might improve when there is greater consistency in the models being used. “People are increasingly converging around foundation models: very general models that do lots of things, like OpenAI’s GPT-3 and GPT-4,” he says. That is more likely to give rise to reproducible results than some bespoke model trained in-house. “So you could imagine reproducibility getting a bit better if everyone is using the same systems.”

Vanschoren draws a hopeful analogy with the aerospace industry. “In the early days it was very dangerous, and it took decades of engineering to make airplanes trustworthy.” He thinks that AI will develop in a similar way: “The field will become more mature and, over time, we will learn which systems we can trust.” The question is whether the research community can contain the problems in the meantime.